A ‘Digital Mask’ Could Help Protect Patients’ Medical Records

Scientists develop a technology that prevents potentially sensitive personal biometric information from being extracted or shared.

A group of scientists has proposed a new piece of artificial intelligence technology to protect and encrypt patients’ medical data. Because health information comprises some of the most sensitive details of a person’s life, this could be vital to preventing the misuse of data in several other spheres as well.

Biometric information, such as unique fingerprints and facial identity, was originally intended as an authentication measure to ensure that only users could access their own data. But its widespread use as a password to personal information, especially within healthcare, has linked together disparate details of people’s lives, all of which can be (and have been) compromised by breaching just one network. To combat this, a group of software scientists and ophthalmologists has designed software to protect valuable user data from such breaches.

The scientists, who published their experiment and its findings in Nature Medicine yesterday, acknowledge the risks that come with storing biometric information on medical databases. At the same time, they are aware that facial images are often crucial for diagnosing certain ocular (eye-related) disorders and diseases, and they thus oppose some existing privacy protection methods, such as blurring facial details or cropping out identifiable features.

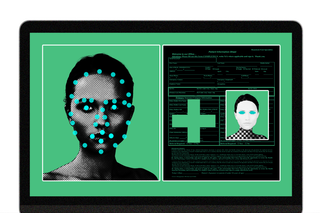

Hence, they designed software that would capture and then artificially reconstruct people’s faces digitally, but in the process would “digitally mask” biometric information — such as the iris and periocular area (the area surrounding the eyeball, such as forehead wrinkles) — removing them from the final reconstruction. This way, patients’ biometric information and privacy can be protected without withholding vital medical information from medical practitioners.

One factor driving the urgency of developing such software was the Covid-19 pandemic and the dependence on technology that it brought with it. “During the COVID-19 pandemic, we had to turn to consultations over the phone or by video link rather than in person. Remote healthcare for eye diseases requires patients to share a large amount of digital facial information. Patients want to know that their potentially sensitive information is secure and that their privacy is protected,” Haotian Lin, one of the ophthalmologists in the group that devised the new software, explained to ScienceDaily.

To check the effectiveness of their software, the scientists also ran clinical trials in which they diagnosed patients’ ocular conditions based on both their original facial records and the software-reconstructed videos, and then examined the consistency between the two diagnoses. The scientists further write in their study that “identity removal validation was also used to show whether the Digital Mask (DM) could effectively remove personal biometric attributes.” The scientists also “conducted an artificial intelligence (AI)-powered reidentification validation to evaluate the performance of the DM in evading recognition systems.”
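For readers curious about what "examining the consistency" of two sets of diagnoses can look like in practice, here is a minimal sketch. It assumes a chance-corrected agreement score (Cohen's kappa) as the measure; the article does not say which statistic the researchers used, and the function name, diagnosis labels, and patient data below are all made up for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two lists of diagnoses.

    Hypothetical helper for illustration only; not taken from the study.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both lists must be non-empty and of equal length")

    n = len(labels_a)

    # Share of patients who received the same diagnosis from both sources.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, given how often each label is used.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)

    if expected == 1.0:
        # Both lists use a single identical label: agreement is perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up diagnoses for six patients (assumed labels, not study data).
from_original = ["strabismus", "normal", "ptosis", "normal", "strabismus", "normal"]
from_masked = ["strabismus", "normal", "ptosis", "strabismus", "strabismus", "normal"]

kappa = cohens_kappa(from_original, from_masked)
print(f"Agreement (kappa): {kappa:.3f}")
```

A score of 1 would mean the diagnoses from masked and original footage always match, while a score near 0 would mean they match no more often than chance would predict.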

The results of the trial indicated that diagnoses made from artificially reconstructed, digitally masked faces were largely consistent with diagnoses made from patients’ original faces, pointing to the feasibility of using reconstructions for clinical purposes. Further, the researchers observed that facial recognition algorithms, while effective at identifying patients from their original images and somewhat effective at identifying them from cropped images, were largely unsuccessful in identifying patients from their digitally masked faces. “When using the DM, the performance of face recognition was significantly degraded,” the scientists write. Professor Patrick Yu-Wai-Man, a contributing author to the study, told Metro, “Digital masking offers a pragmatic approach to safeguarding patient privacy while still allowing the information to be useful to clinicians.”

In the 21st century, data has become a precious commodity, with many even calling it “the new oil” to signify its value as a resource. Businesses with access to precise personal data, for instance, can reach a dedicated customer base through hyper-targeted ads on the internet. Websites may offer their services for free, and then mine user information that they can sell to businesses to help them reach potential customers. There can also be graver consequences, like personal information falling into the hands of stalkers and other potentially harmful persons through a data black market, or being used by the police and state for surveillance without the consent and acknowledgment of the people being watched, as a means to discourage and curb dissent.

In recent times, with more awareness around personal data, people have demanded more accountability from the technology companies and databases that hold their data. In some cases, people have also consciously tried to guard their identity and personal information by using anonymous profiles, virtual private networks, and other safeguards. However, healthcare is one sphere where people still have to enter very specific, accurate, and at times highly private personal information into a larger database. A significant part of modern medicine also depends on intricate details about people’s lifestyles and daily routines, through which one can determine their consumption patterns and other sensitive information.

This makes medical data a goldmine of personal information, and glaringly vulnerable to exploitation. Currently, there exist no robust safeguards against the extraction and use of private data for other purposes. But with the digitization of healthcare only increasing, the gaps in privacy are more urgent than ever. The Indian government, for instance, has charted out a National Digital Health Mission (NDHM) that would digitally maintain records of every patient. This raised concerns about the use of medical data for intensive surveillance by the state itself. In the absence of privacy safeguards, health data is also prone to leaks and theft. One such breach involved data of Delhi’s Covid patients being revealed on the state government’s websites in early 2021, with a report stating “both private hospitals and diagnostic centers were treating medical records with shocking disregard for patient privacy or confidentiality.”

Sidra Jawed, a lawyer who deals with worker safety, inclusion, and privacy, pointed out that there is no legal framework to guide the government on obtaining consent and protecting patient data, and so nothing to prevent the misuse of data drawn in from the scheme. “…the voluntary deployment of digital health ID without a strong legal framework to protect health data has created a regulatory vacuum, making it difficult to implement NDHM’s health data management, which includes data exchange, privacy, and strategic control,” she explained. Notably, the NDHM in its formulation relied upon the then-pending approval of the Personal Data Protection Bill, 2019. The government withdrew that bill in 2022.

The use of digital masks could thus be vital at a time when there is increasing global insecurity about data protection, privacy, and the use of facial recognition technologies. Digital masking can also prevent patient data from falling into the wrong hands. It is notable for editing out biometric information during the reconstruction process itself, thus lowering patients’ and databases’ reliance on biometric codes and reducing the vulnerability of such unique and sensitive information. The software’s success in clinical trials indicates that, if implemented at a large scale, it can be a useful tool for privacy protection.

Amlan Sarkar is a staff writer at TheSwaddle. He writes about the intersection between pop culture and politics. You can reach him on Instagram @amlansarkr.
