According to 3.5 Million Books, Women Are Beautiful and Men Are Rational

A new study highlights literature’s gender bias — as well as the dangers of AI trained on sexist language.

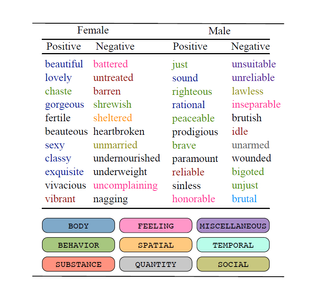

An analysis of 3.5 million English-language books published between 1900 and 2008 spotlights a deep gender bias in literature. It concluded that women are twice as likely as men to be described by their physical attributes, with “beautiful” and “sexy” the top two adjectives used for women. Men, on the other hand, are most commonly described by their actions and attitudes, with “righteous,” “rational,” and “brave” the most common.

The researchers, from the University of Copenhagen as well as U.S. institutions, used machine-learning algorithms to comb through 11 billion words across the collection of books, which included both fiction and non-fiction texts. In addition to analyzing the adjectives used to describe men and women, the team also analyzed the verbs (actions) used in tandem with these descriptions. They found women were five times as likely as men to be accompanied by verbs that conveyed negativity regarding their body and appearance.

A top-11 list of the most common adjectives used to describe men and women, compiled from an analysis of 3.5 million books. Image Credit: University of Copenhagen

The gender bias in what gets published and how it’s received (and therefore in which authors’ perspectives and descriptions of women influence readers) attracted attention almost a decade ago, when news outlets reported that both the authors of books reviewed and the reviewers themselves in the U.S. and U.K. publishing markets were overwhelmingly male.

More recently, and closer to home, writing in 2018 for Scroll, Jenny Bhatt points out that while reliable gender statistics within India’s publishing industry are difficult to come by, it remains by all accounts an industry that heavily favors men and their points of view. “Amazon India’s new-book-releases page often shows an ongoing and significant male author bias. This skew is more pronounced in some genres but, overall, male-centric points of view and narratives continue to have more validity and visibility than those of and by women,” Bhatt writes. As recently as last year, “longlists and shortlists of certain big awards are dominated by male writers. With commercial fiction, women writers may well be greater in number but they still don’t lead the bestselling charts as Twinkle Khanna recently pointed out.”

Of course, an industry’s gender bias in authorship doesn’t necessitate the sexist depictions of women revealed in the University of Copenhagen’s study, but it does facilitate them. While there are male authors who can write about women intelligently, drawing on more than just a handful of physical adjectives to describe them, the vast canon of literature holds more examples of those who do not, as the Twitter account @men_write_women chronicles:

JG Ballard’s The Drowned World comparing Beatrice’s “long oiled body” to a “sleeping python”. Let’s not also miss that reference to her “beautiful supple body” either. *cringe* #menwritingwomen @men_write_women pic.twitter.com/yBmFlGFdXN

But the authors of the study are quick to point out that the danger revealed by this literary review goes beyond reinforcing sexist gender norms among readers. The same kind of machine-learning algorithms used in the study are used in many real-world applications, where they could reinforce the very inequality the study brings to light.

“The algorithms work to identify patterns, and whenever one is observed, it is perceived that something is ‘true.’ If any of these patterns refer to biased language, the result will also be biased. The systems adopt, so to speak, the language that we people use, and thus, our gender stereotypes and prejudices,” Isabelle Augenstein, an author of the study and an assistant professor in the University of Copenhagen’s Department of Computer Science, said in a statement. “If the language we use to describe men and women differs, in employee recommendations, for example, it will influence who is offered a job when companies use IT systems to sort through job applications.”

The world has already seen this in action: In late 2018, Amazon shut down a proprietary recruitment program that used machine learning to review candidates’ CVs. Fed on the CVs of overwhelmingly male candidates, the artificial intelligence tool taught itself to downgrade “resumes that included the word ‘women’s,’ as in ‘women’s chess club captain.’ And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter,” reported Reuters at the time.

Other recent examples of language imbuing AI with the same insidious gender biases found in real life include the Google Translate app’s infusion of gender stereotypes into genderless languages, and a human-AI collaboration to generate a fairy tale in the style of the Brothers Grimm in which “the text constantly reminds the reader that the princess is kind, good, joyous, and, most importantly, beautiful,” reported Quartz at the time. The only other female character, the queen, remains silent and nods; the multiple male characters get a much wider range of actions.

There’s no easy solution to this — language informs even our most subconscious sexist beliefs as much as it reflects them, creating a social cycle of reinforced norms that resembles the learning cycle of a machine learning tool. Fixing our language and our AI tools requires fixing ourselves. According to Augenstein, being aware of our own biases is the best place to start — and the good news is, while humans may still struggle to make the necessary adjustments post-awareness, AI can be programmed to do it automatically.

“We can try to take this into account when developing machine-learning models by either using less biased text or by forcing models to ignore or counteract bias. All three things are possible,” she said in the statement.

Which suggests gender equality is, too — and this chapter is just the beginning.

Liesl Goecker is The Swaddle's managing editor.
