Lawsuit Raises Copyright Concerns in AI‑Generated Work

The lawsuit against GitHub Copilot echoes concerns of copyright infringement and ethics in AI training and output.

GitHub Copilot, an AI tool that automatically suggests blocks of code as programmers type, has recently come under scrutiny for allegedly violating open-source licenses. Earlier this month, programmer and lawyer Matthew Butterick, along with a team of lawyers, filed a class-action lawsuit in the US against GitHub, its parent company Microsoft, and AI-technology partner OpenAI, claiming that the tool profits “from the work of open-source programmers by violating the conditions of their open-source licenses.” While the lawsuit does not directly accuse the companies of copyright infringement, it echoes concerns raised by many creators in the past.

The people behind the lawsuit alleged that Copilot does not provide attribution when it reproduces code, violating the licenses governing open-source code, noted an article in Wired. While copyright concerns around AI-generated work are not new, experts speculate that this lawsuit, which challenges the very foundations upon which AI tools are being built, might have a significant impact on the future landscape of AI tech. Joseph Saveri, founder of the law firm behind the suit, called it the “first major step in the battle against intellectual-property violations in the tech industry arising from artificial-intelligence systems.”

The New York Times noted that the lawsuit may well be the first “legal attack” on the way AI is trained. AI tools are trained on large amounts of data scraped from the web. In other words, artificial intelligence is trained on work produced by human creators. Copilot, a preview of which was launched in 2021, is trained in a similar manner on “billions of lines of code” sourced from the web.

When it comes to the recent lawsuit, Saveri noted in a press release that programmers “will not be the last community of creators who are affected by AI systems.” Open source software code is designed to be freely shared and has helped drive the rise of many technologies available today, noted The New York Times article. However, this code is governed by licenses that Butterick claims have been violated by Copilot. “As a longtime open-source programmer, it was apparent from the first time I tried Copilot that it raised serious legal concerns, which have been noted by many others since Copilot was first publicly previewed in 2021,” he said. In fact, some programmers took to Twitter to highlight how Copilot is reproducing snippets of copyrighted code, almost exactly.

Explaining the importance of attribution in creative work, Butterick cited the example of an engineer in Europe who receives new clients through attribution. “‘I make open source software; people use my packages, see my name on it, and contact me, and I sell them more engineering or support.’ He said, ‘If you take my attribution off, my career is over, and I can’t support my family, I can’t live.’”

Herein lies the problem. Creators, including visual artists, writers, and composers, have called into question the murky ethics surrounding AI-generated work. Several artists are furious that their work has been used to train AI without their knowledge or consent. For instance, Hollie Mengert, a Disney illustrator, found that an AI model trained on her works was reproducing her artistic style. “For me, personally, it feels like someone’s taking work that I’ve done, you know, things that I’ve learned — I’ve been a working artist since I graduated art school in 2011 — and is using it to create art that that [sic] I didn’t consent to and didn’t give permission for,” Mengert said.

Many wonder whether the rapid pace at which AI generates output from text prompts will push creative professions into obsolescence. “This whole arc that we’re seeing right now—this generative AI space—what does it mean for these new products to be sucking up the work of these creators?” Butterick asked.

The lawsuit further states that “… Copilot’s goal is to replace a huge swath of open source by taking it and keeping it inside a GitHub-controlled paywall. It violates the licenses that open-source programmers chose and monetizes their code despite GitHub’s pledge never to do so.”

Questions about intellectual property and artificial intelligence have divided many, and no clear answers have emerged from the debate. For instance, can AI-generated work be copyrighted? While this may depend on individual countries and their copyright laws, Ars Technica reported in September that a New York-based artist had received US copyright registration for a graphic novel that features AI-generated art.

Whether AI should be trained on copyrighted data is a much larger question. In the US, the practice has been justified by citing the fair use doctrine, which encourages “the use of copyright-protected work to promote freedom of expression,” The Verge reported. How can AI be ethically trained using the work of others? Cadio Zirpoli, a lawyer at the Joseph Saveri Law Firm, stated, “We’d like to see them train their AI in a manner which respects the licenses and provides attribution.”

In response to queries, GitHub said the company has been “committed to innovating responsibly with Copilot from the start, and will continue to evolve the product to best serve developers across the globe.” Wired further quoted Thomas Dohmke, the CEO of GitHub, as stating that Copilot has a feature that prevents copying from existing code. “When you enable this, and the suggestion that Copilot would make matches code published on GitHub—not even looking at the license—it will not make that suggestion,” Dohmke said.

The suit is still in its early stages and has not yet been granted class-action status. Whether it will have the kind of massive impact some expect remains to be seen. Still, the lawsuit highlights the blurred lines of legality around AI-generated work and pushes for greater accountability. As Butterick told The Verge, “AI systems are not magical black boxes that are exempt from the law, and the only way we’re going to have a responsible AI is if it’s fair and ethical for everyone. So the owners of these systems need to remain accountable.”

Ananya Singh is a Senior Staff Writer at The Swaddle. She has previously worked as a journalist, researcher, and copy editor. Her work explores the intersection of environment, gender, and health, with a focus on social and climate justice.

Related

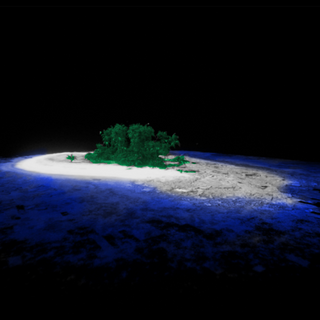

Island Nation to Upload Itself to the Metaverse as Climate Change Threatens Existence