Scientists Are Teaching a Robot How to Laugh

Robots may eventually laugh like us, and with us, reflecting a desire for A.I. to reproduce every part of “human-like” intelligence, including empathy.

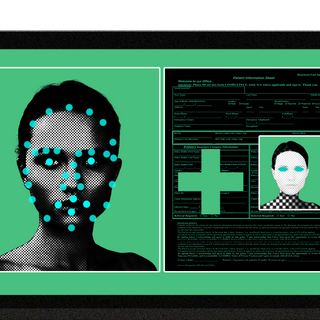

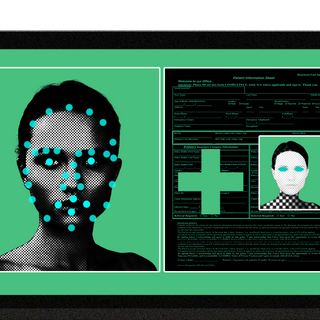

Meet Erica. Erica is a robot with a distinguished sense of humor — she knows when to let out sympathetic chuckles or snort hysterically. Does Erica know jokes, or how to tell them? Not quite, but Erica recognizes when a social situation is in need of a polite smile, and when a real LOL.

Artificial intelligence is often seen as cold, mechanical, and unemotional. With Erica, researchers at Kyoto University in Japan are trying to encode an aspect of empathy within spoken dialogue systems, so that our interactions with robots take on a more human appeal. Their work was published in the journal Frontiers in Robotics and AI last week.

“We think that one of the important functions of conversational A.I. is empathy,” said Koji Inoue, the lead author of the research. “So we decided that one way a robot can empathize with users is to share their laughter.”

A.I. today basks in the glory of versatility: it can write poems, hold conversations, churn out music, curate playlists, and give us recommendations, as friends do. Then there’s the more social role of fighting fires and treating people. What Alfred was to Batman, A.I. is poised to be to us. The ethics and concerns with programming our biases within these systems remain compelling, but what’s curious is a concerted effort to reproduce in robots every part of “human-like” intelligence, including emotional intelligence and empathy. It raises the question of the kind of intelligence we want from our A.I.: is it the calculative kind, or does emotional intelligence feature too? Moreover, how much of the latter can be calibrated by way of algorithms and machine learning?

This is not the first time scientists have tried to make a robot laugh, or find its tickle bones between wires and codes. “Laughter generation requires a high level of dialogue understanding. Thus, implementing laughter in existing systems, such as in conversational robots, has been challenging,” the researchers wrote in their paper.

With Erica (who is already quite famous for starring in a sci-fi movie), researchers tried a “shared laughter prediction” model that could help train the robot in conversational laughter. The training data included 80 speed-dating conversations that male students at the university had with Erica. During these conversations, four female actors operated the robot. The interactions were annotated to distinguish between solo laughs, social laughs (subdued laughs where actual laughing-out-loud humor is missing), and proper chuckles.

These conversations helped train Erica to a) detect laughter around her, b) decide whether to laugh, and c) choose the laugh most appropriate for that situation — all of which worked to reflect her empathy, if not quite her sense of humor. “Conversation is, of course, multimodal, not just responding correctly. So we decided that one way a robot can empathize with users is to share their laughter, which you cannot do with a text-based chatbot,” the researchers wrote. Moreover, conversational behaviors in robots like Erica can also display sociability through the gaze of the eye, gestures, or speaking styles. They tested Erica’s “sense of humor” by playing clips of her responding to different situations to 130 volunteers, who rated the model high for displaying “empathy, naturalness, human-likeness, and understanding.”
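
The three steps described above (detect, decide, choose) can be pictured as a simple chain of checks. What follows is only an illustrative sketch, not the researchers' model: the `UserTurn` fields, the 0.5 thresholds, and the "social" and "hearty" labels are all hypothetical stand-ins for whatever scores a trained system would actually produce.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserTurn:
    # All three scores are hypothetical outputs of trained classifiers, in [0, 1].
    laugh_detected: float  # how likely it is that the user just laughed
    should_share: float    # how likely it is that laughing back is appropriate
    hearty: float          # how likely it is that a big laugh fits better than a polite one


def choose_laugh(turn: UserTurn, threshold: float = 0.5) -> Optional[str]:
    """Return "social", "hearty", or None (stay quiet) for one user turn."""
    # Step a: detect laughter. With no user laugh there is nothing to share.
    if turn.laugh_detected < threshold:
        return None
    # Step b: decide whether to laugh along at all.
    if turn.should_share < threshold:
        return None
    # Step c: pick the kind of laugh that suits the moment.
    return "hearty" if turn.hearty >= threshold else "social"


if __name__ == "__main__":
    print(choose_laugh(UserTurn(laugh_detected=0.9, should_share=0.8, hearty=0.2)))
```

The point of the ordering is that each stage can veto the next: a robot that laughs when nobody else has, or laughs heartily at a polite chuckle, would come across as less empathetic, not more.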

Residing within this effort is a fascination with projecting our unique consciousness onto inanimate machines. Arguably, this can be a good thing: as A.I. comes to dominate every aspect of life, there is a need to shift discourse towards empathy in machine learning. And this has indeed proven fruitful: look at the use of A.I. robots in healthcare. For instance, dementia care exacts an emotional toll on healthcare providers, who report severe burnout and fatigue.

“AI robots can use empathy to care for dementia patients without feeling ‘burned-out.’ They can be the go-between between doctors/nurses and their patients. They can work closely with doctors to gather information and refine treatment plans. They can work with nurses to monitor patients and engage in day-to-day care. At the same time, dementia patients who receive consistent empathetic care report better outcomes,” explained Jun Wu.

At the same time, empathy in A.I. may never quite fully achieve its desired result. “Firstly, humans do not consistently agree on what is or is not ethical. Second, contemporary AI and machine learning methods tend to be blunt instruments which either search for solutions within the bounds of predefined rules, or mimic behavior,” a paper published this year argued. “An ethical A.I. must be capable of inferring unspoken rules, interpreting nuance and context, possess and be able to infer intent, and explain not just its actions but its intent.” And when these unspoken rules are forever changing, how can an insentient algorithm keep up? It’s a terribly complex undertaking to understand and identify the range of emotions humans feel.

There are other ethical concerns with training A.I.s to understand human empathy or be self-aware that further complicate the idea of personhood, agency, and consent. If an A.I. uses empathy to make decisions, is it as human as us? Ultron in Avengers is but one such example in fiction — a machine that developed consciousness to realize the extent of social inequity and injustice, and chose to act on it by destroying the world.

The tension between innovation and preservation sits at the heart of this discourse. It helps to look at Erica and her history: Japanese scientists Hiroshi Ishiguro and Kohei Ogawa built this humanoid robot in an attempt to study the interaction and possible relationship between humans and computers. These human replicas make for fascinating but haunting work, but the endeavor shows science’s, and the individual’s, desire to understand who we are, and what makes us. The unique humanness we are concerned with is what the Japanese call “sonzai-kan.” And to “re-create human presence we need to know more about ourselves than we do—about the accumulation of cues and micromovements that trigger our empathy, put us at ease, and earn our trust,” Alex Mar beautifully wrote in a profile of Ishiguro’s work. Mar adds:

“There are entire planets of intimate information, our most interior level of consciousness, that we will never fully be able to share. Our longing to connect, to bridge this divide, is a driving human desire—one that Hiroshi believes will someday be satisfied through humanlike machines. He is convinced that human emotions, whether empathy or romantic love, are nothing more than responses to stimuli, subject to manipulation. Through the fluid interplay of its pneumatic joints, the arch of its mechanical brow, the tilt of its plastic skull, the many subtle movements achieved through years of research studying the human template, the android becomes more able to span that gap, to form a perfectly engineered bond with us. An elaborate metaphysical trick, perhaps—but what does that matter, if it fills a need? If it feels real?”

For now, Erica’s ability to laugh doesn’t mean the lacuna in empathy or conversational humor has been filled. The researchers of the present study conclude that “this is [not] an easy problem at all, and it may well take more than 10 to 20 years before we can finally have a casual chat with a robot like we would with a friend.”

That robots can laugh like us, and with us, makes the A.I. more lifelike. Does the uniqueness of “life” change, if all that makes us human can be uploaded, reproduced, and trained?

Saumya Kalia is an Associate Editor at The Swaddle. Her journalism and writing explore issues of social justice, digital sub-cultures, media ecosystem, literature, and memory as they cut across socio-cultural periods. You can reach her at @Saumya_Kalia.